Featured

Datasets

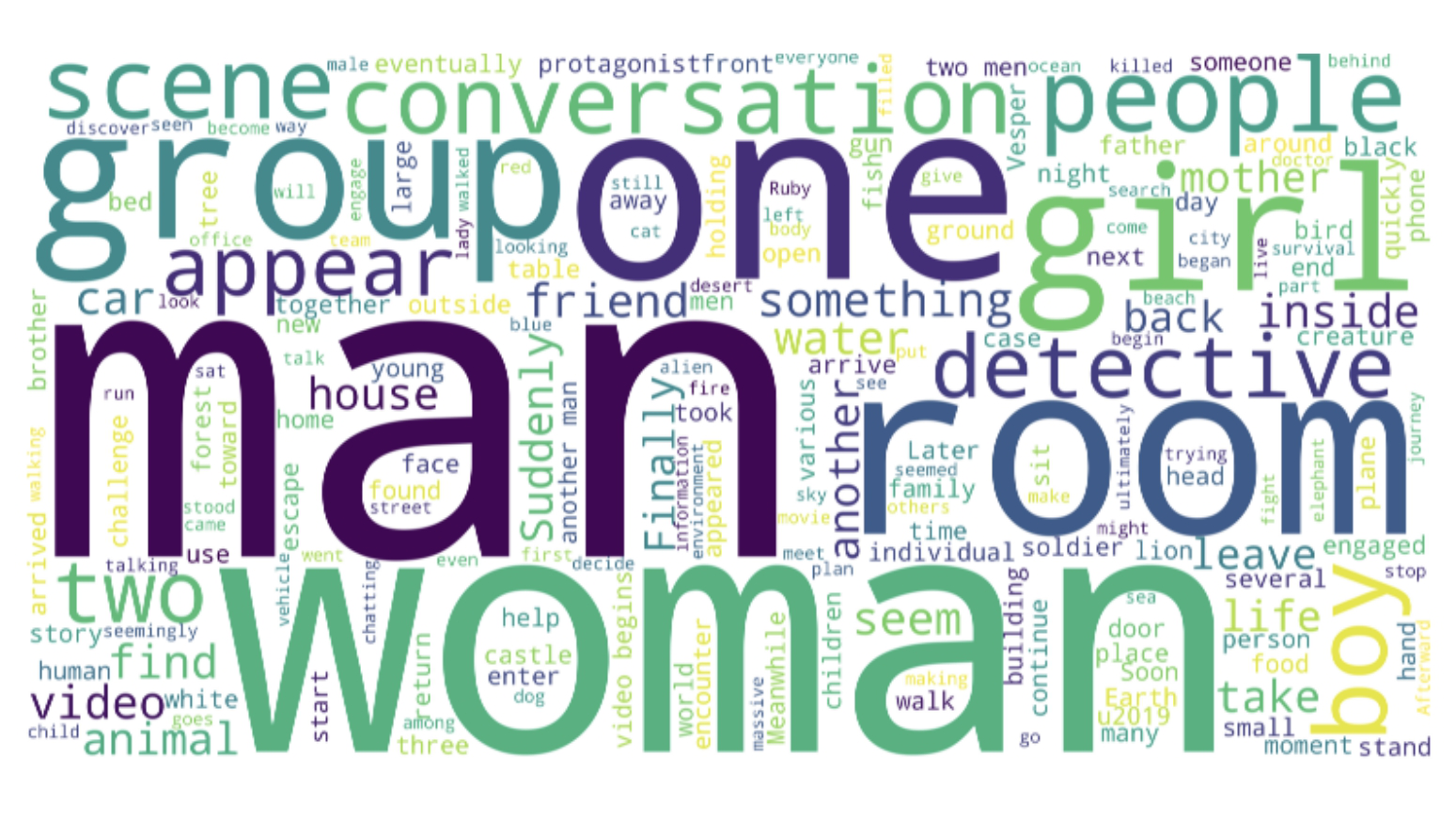

VDC

The first benchmark for detailed video captioning, featuring over one thousand videos with significantly longer and more detailed captions.

MovieChat

A manually labeled long-video QA and captioning dataset containing 1,000 videos, each longer than ten thousand frames.

VFD-2000

A video fight detection dataset collected from YouTube, containing 2,000 video clips across diverse scenarios.
