About

Wenhao Chai

Docs (updated on 02/15/2025): CV | Research Statement | Slides

Research: Google Scholar

Social Media: Twitter | Instagram | LinkedIn | Hugging Face

Wenhao Chai is currently a graduate student at the University of Washington in the Information Processing Lab, advised by Prof. Jenq-Neng Hwang. Previously, he was an undergraduate student at Zhejiang University in the CVNext Lab, advised by Prof. Gaoang Wang. He is fortunate to work with Prof. Christopher D. Manning at Stanford University and has worked with Prof. Saining Xie and Prof. Yilun Du. He has interned at Pika Labs and Microsoft Research Asia. His research focuses primarily on large multimodal models (LMMs) for video understanding, embodied agents, and generative models. He has published related papers in top-tier venues such as ICLR, CVPR, ICCV, ECCV, and AAAI. He has also organized workshops and tutorials at CVPR and AAAI, and has served as a reviewer for NeurIPS, ICLR, ICML, CVPR, ECCV, AAAI, AISTATS, and IJCV.


News and Highlights

- To junior master's/undergraduate students: if you would like to chat about life, career plans, or research ideas related to AI/ML, feel free to send me a Zoom or Google Meet invitation via email (wchai@uw.edu) to schedule a meeting. I will dedicate at least 30 minutes every week to such meetings. I especially encourage students from underrepresented groups to reach out.

- We are hosting a Discord server for professors and students to share daily arXiv papers and discuss research. Join us.

- Fall 2025 CS Ph.D. application record.

- 04/2025: We are hosting the CVPR 2025 Video Understanding Challenge @ LOVEU.

- 01/2025: Two papers accepted to ICLR 2025.

- 12/2024: Two papers accepted to AAAI 2025.

- 07/2024: Two papers accepted to ECCV 2024.

- 06/2024: One technical report accepted to CVPR 2024 workshop @ NTIRE.

- 06/2024: I am working at Pika Labs as an intern to develop next-generation video understanding and generation models.

- 05/2024: One paper accepted to CVPR 2024 workshop @ Embodied AI.

- 04/2024: We are hosting the CVPR 2024 Long-form Video Understanding Challenge @ LOVEU.

- 04/2024: Invited talk at the AgentX seminar on our STEVE series of works.

- 03/2024: One paper accepted to ICLR 2024 workshop @ LLM Agents.

- 02/2024: Two papers accepted to CVPR 2024 with one highlight (2.81%).

- 02/2024: Invited talk at AAAI 2024 workshop @ IMAGEOMICS.

- 12/2023: One paper accepted to AAAI 2024.

- 09/2023: One paper accepted to ICCV 2023 workshop @ TNGCV-DataComp.

- 07/2023: Two papers accepted to ICCV 2023.

Recent Projects

* Equal contribution. † Project lead. ‡ Corresponding author.

AuroraCap: Efficient, Performant Video Detailed Captioning and a New Benchmark

Wenhao Chai†, Enxin Song, Yilun Du, Chenlin Meng, Vashisht Madhavan, Omer Bar-Tal, Jenq-Neng Hwang, Saining Xie, Christopher D. Manning

International Conference on Learning Representations (ICLR), 2025

Project Page | Paper | Video | Model | Benchmark | Leaderboard | Poster | Code

AuroraCap is a multimodal LLM designed for detailed image and video captioning. We also release VDC, the first benchmark for detailed video captioning.

SAMURAI: Adapting Segment Anything Model for Zero-Shot Visual Tracking with Motion-Aware Memory

Cheng-Yen Yang, Hsiang-Wei Huang, Wenhao Chai, Zhongyu Jiang, Jenq-Neng Hwang‡

arXiv preprint, 2024

Project Page | Paper | Video | Raw Result | Code

SAMURAI is a zero-shot visual tracking framework that adapts the Segment Anything Model (SAM) to visual tracking with a motion-aware memory.

StableVideo: Text-driven Consistency-aware Diffusion Video Editing

Wenhao Chai, Xun Guo‡, Gaoang Wang, Yan Lu

International Conference on Computer Vision (ICCV), 2023

Project Page | Paper | Video | Demo | Code

We introduce temporal dependency into existing text-driven diffusion models, which allows them to generate a consistent appearance for the newly edited objects.

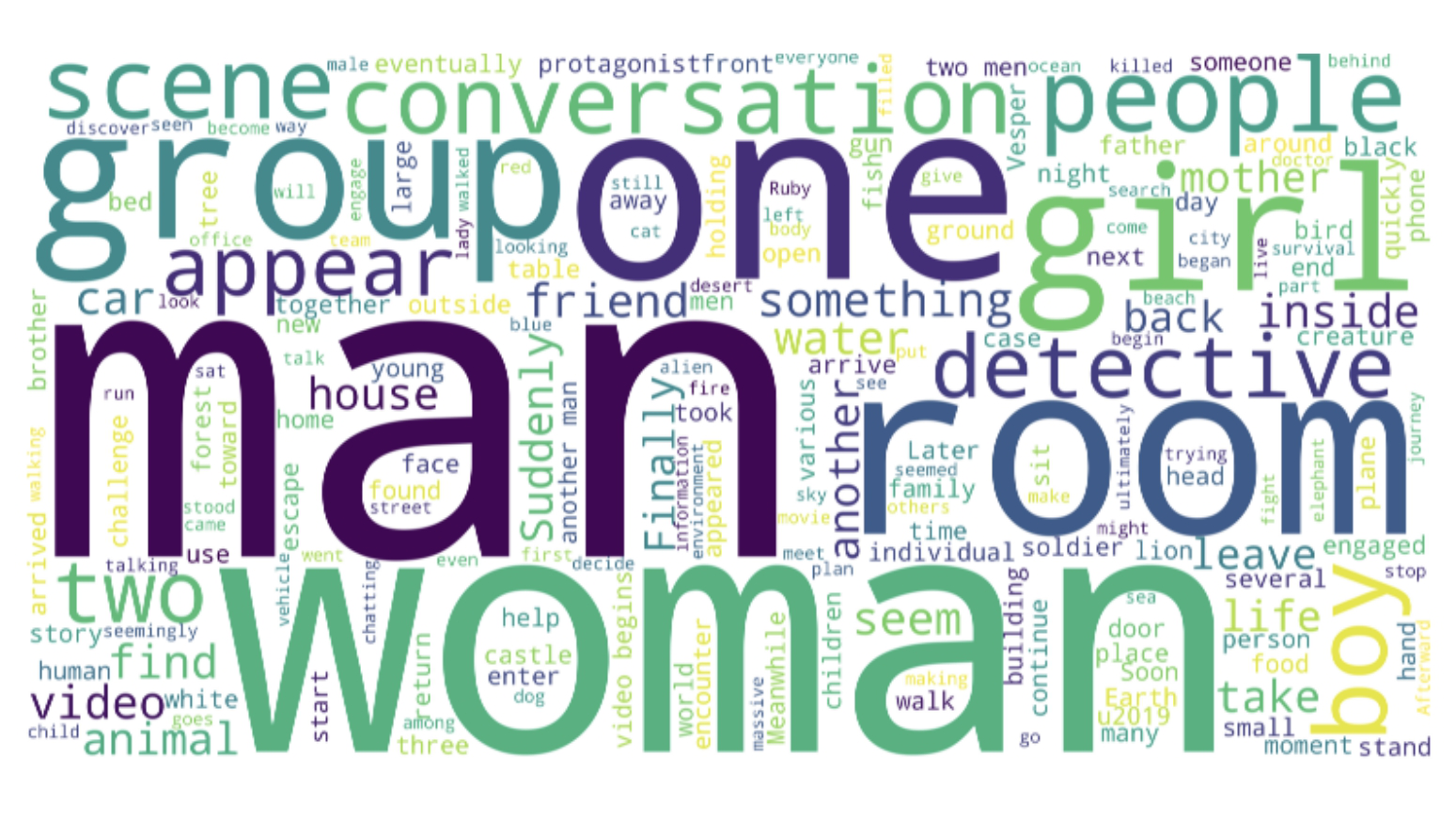

MovieChat: From Dense Token to Sparse Memory in Long Video Understanding

Enxin Song*, Wenhao Chai*†, Guanhong Wang*, Yucheng Zhang, Haoyang Zhou, Feiyang Wu, Haozhe Chi, Xun Guo, Tian Ye, Yanting Zhang, Yan Lu, Jenq-Neng Hwang, Gaoang Wang‡

Computer Vision and Pattern Recognition (CVPR), 2024

Project Page | Paper | Blog | Video | Dataset | Leaderboard | Code

MovieChat achieves state-of-the-art performance in extra-long video (more than 10K frames) understanding by introducing a memory mechanism.