About

Wenhao Chai

Wenhao Chai is currently a graduate student at the University of Washington, in the Information Processing Lab advised by Prof. Jenq-Neng Hwang. Previously, he was an undergraduate student at Zhejiang University, in the CVNext Lab advised by Prof. Gaoang Wang. He was fortunate to intern at the Multi-modal Computing Group, Microsoft Research Asia.

His research is primarily in video understanding, generative models, embodied agents, and human pose and motion. Have a look at the overview of our research. All publications are listed here and on Google Scholar.

We are always looking for collaborators who share our interests. Follow me on Twitter.

StableVideo

07/2023: We release StableVideo, a diffusion-based framework for text-driven video editing, which was accepted to ICCV 2023. The project repo has gained over 1.3k stars on GitHub.

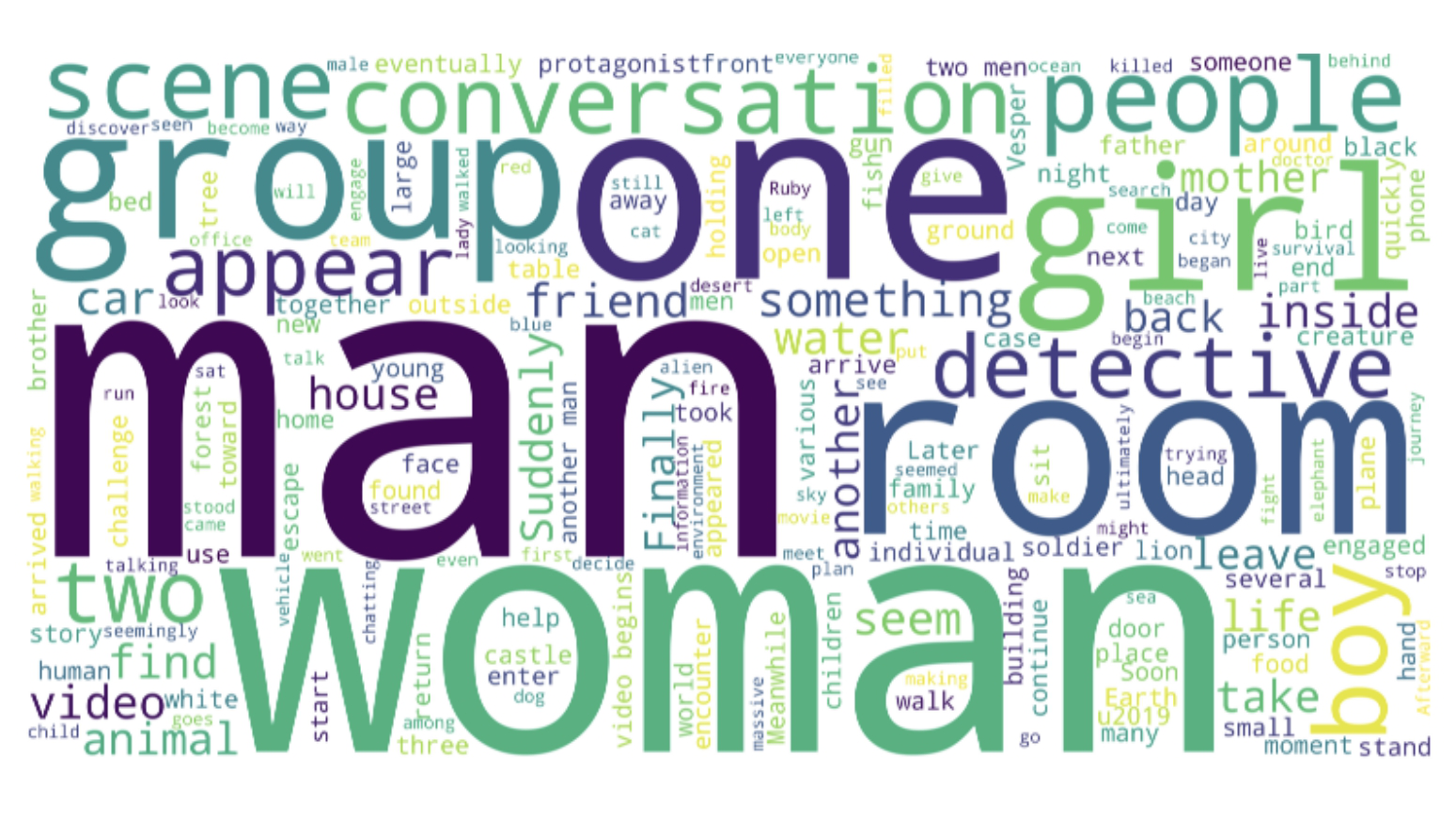

MovieChat

07/2023: We release MovieChat, the first framework that can chat with over ten thousand frames of video, accepted to CVPR 2024. We also host the LOVEU (LOng-form VidEo Understanding) challenge at CVPR 2024!

STEVE

12/2023: We release the STEVE series, named after the protagonist of the game Minecraft, which aims to build an embodied agent based on vision models and LLMs within an open world.

CityGen

12/2023: We release CityGen, a novel framework for infinite, controllable and diverse 3D city layout generation.

Check Out

News and Highlights

- 04/2024: We are hosting the CVPR 2024 Long-form Video Understanding Challenge @ LOVEU.

- 04/2024: Invited talk at the AgentX seminar on our STEVE series.

- 03/2024: One paper accepted to ICLR 2024 workshop @ LLM Agents.

- 02/2024: Two papers accepted to CVPR 2024 (1 highlight).

- 02/2024: Invited talk at AAAI 2024 workshop @ IMAGEOMICS.

- 01/2024: We are working with Pika Lab to develop next-generation video understanding and generation models.

- 12/2023: One paper accepted to ICASSP 2024.

- 12/2023: One paper accepted to AAAI 2024.

- 11/2023: Two papers accepted to WACV 2024 workshop @ CV4Smalls.

- 09/2023: One paper accepted to ICCV 2023 workshop @ TNGCV-DataComp.

- 09/2023: One paper accepted to IEEE T-MM.

- 08/2023: One paper accepted to BMVC 2023.

- 07/2023: Two papers accepted to ACM MM 2023.

- 07/2023: Finished my research internship at Microsoft Research Asia (MSRA), Beijing.

- 07/2023: Two papers accepted to ICCV 2023.

Recent

Projects

* Equal contribution. † Project lead. ‡ Corresponding author.

StableVideo: Text-driven Consistency-aware Diffusion Video Editing

Wenhao Chai, Xun Guo‡, Gaoang Wang, Yan Lu

International Conference on Computer Vision (ICCV), 2023

[Website] [Paper] [Video] [Demo] [Code]

We introduce temporal dependency to existing text-driven diffusion models, which allows them to generate a consistent appearance for the new objects.

MovieChat: From Dense Token to Sparse Memory in Long Video Understanding

Enxin Song*, Wenhao Chai*†, Guanhong Wang*, Yucheng Zhang, Haoyang Zhou, Feiyang Wu, Haozhe Chi, Xun Guo, Tian Ye, Yanting Zhang, Yan Lu, Jenq-Neng Hwang, Gaoang Wang‡

Computer Vision and Pattern Recognition (CVPR), 2024

[Website] [Paper] [Blog] [Dataset] [Code]

MovieChat achieves state-of-the-art performance in extra-long video (more than 10K frames) understanding by introducing a memory mechanism.